When AI Learns to Listen: The Coming Era of Interspecies Communication

AI is moving beyond human speech recognition and into decoding the signals animals use to communicate. From whale clicks to elephant rumbles, this article explores how AI might help bridge the gap between species, and looks at the science, ethics, and future of AI-assisted animal communication.

By Zahir Wani

TL;DR

AI is now being used to decode more than human speech: researchers are applying it to the acoustic, behavioral, and even neural signals of animals. Interspecies communication might be closer than we think, driven by advances in AI sound analysis, brain-computer interfaces, and large language models trained on non-human data.

I. The Illusion of Human-Only Language

We’ve long assumed language is a uniquely human trait. But as technology begins to uncover structure in the way animals communicate—be it through sound, vibration, gesture, or color—we're beginning to question whether language has always been around us in forms we failed to recognize.

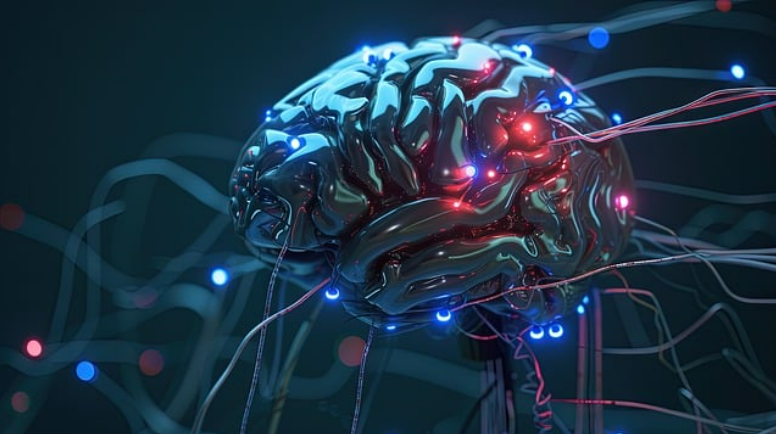

II. How AI Understands Sound Differently

AI models such as OpenAI's Whisper or Google's audio transformers do more than match sounds against a dictionary. They learn statistical regularities in tone, rhythm, and sequence directly from audio, without relying on predefined grammar. Whisper itself is trained on human speech, but when similar architectures are applied to whale songs, elephant rumbles, or birdcalls, they can surface recurring structure that hints at something profound: a kind of logic. A syntax. A system.

Some whale populations, for instance, have distinct regional dialects. Prairie dogs use alarm calls that, according to researchers such as Con Slobodchikoff, vary with a predator's size, shape, and speed. Elephants use low-frequency rumbles to communicate over long distances, and part of that signal travels through the ground as seismic vibration rather than as airborne sound. AI models can extract these non-obvious patterns and begin to analyze them at scale.
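
As a rough illustration of what "extracting patterns" can mean, the sketch below compares two entropy measures over a sequence of call-type labels. The labels and both sequences are invented for illustration; in practice they might come from clustering recorded calls. If knowing the previous call makes the next one much more predictable, the sequence has sequential structure worth a closer look. This is a toy statistic under those assumptions, not the method used by any project mentioned in this article.

```python
import math
from collections import Counter


def unigram_entropy(seq):
    """Shannon entropy (bits) of how often each call type occurs."""
    counts = Counter(seq)
    total = len(seq)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def conditional_entropy(seq):
    """Entropy (bits) of the next call type given the previous one."""
    pair_counts = Counter(zip(seq, seq[1:]))
    prev_counts = Counter(seq[:-1])
    total_pairs = len(seq) - 1
    h = 0.0
    for (prev, _nxt), c in pair_counts.items():
        p_pair = c / total_pairs               # P(prev, next)
        p_next_given_prev = c / prev_counts[prev]  # P(next | prev)
        h -= p_pair * math.log2(p_next_given_prev)
    return h


# Hypothetical call-type labels (assumption: not real recordings).
structured = list("ABCABCABCABCABCABC")   # strictly repeating order
mixed = list("ABACCBBCAACBABCCAB")        # same call types, no fixed order

for name, seq in [("structured", structured), ("mixed", mixed)]:
    print(name,
          "unigram:", round(unigram_entropy(seq), 3),
          "conditional:", round(conditional_entropy(seq), 3))
```

For the strictly repeating sequence the conditional entropy is zero, because each call fully determines the next one, while the mixed sequence stays well above zero. Real animal data would fall somewhere in between, and a gap between the two measures is only a hint of structure, not evidence of meaning.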

III. Earth Species Project and Project CETI

Two of the most ambitious efforts in this space are:

Earth Species Project – Using transformer-based models to detect latent structure in animal communication, even without paired translations.

Project CETI – Applying AI to the codas (click patterns) of sperm whales, with early findings suggesting rule-like, combinatorial structure in how codas are built.

Both projects are betting on the idea that language, in essence, is pattern—and AI is designed to detect patterns beyond the limits of human intuition.
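
To make "language is pattern" a little more concrete, here is a minimal sketch of one way to compare codas by rhythm: convert click timestamps into inter-click intervals, normalize away overall tempo, and match the result against rhythm templates. The template names, their values, and the click times are all hypothetical, and this is not presented as Project CETI's actual pipeline.

```python
import math


def rhythm(click_times):
    """Convert click timestamps (seconds) into inter-click intervals
    that sum to 1, so overall tempo is factored out."""
    intervals = [b - a for a, b in zip(click_times, click_times[1:])]
    total = sum(intervals)
    return [i / total for i in intervals]


def distance(r1, r2):
    """Euclidean distance between two rhythms of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(r1, r2)))


def nearest_template(click_times, templates):
    """Return the name of the closest template with the same number of
    intervals, or None if no template has a matching length."""
    r = rhythm(click_times)
    candidates = {name: t for name, t in templates.items() if len(t) == len(r)}
    if not candidates:
        return None
    return min(candidates, key=lambda name: distance(r, candidates[name]))


# Hypothetical rhythm templates for five-click codas (four intervals each).
templates = {
    "regular": [0.25, 0.25, 0.25, 0.25],
    "slow_start": [0.4, 0.4, 0.1, 0.1],
}

# Hypothetical click timestamps in seconds.
even_coda = [0.0, 0.2, 0.4, 0.6, 0.8]
fast_even_coda = [0.0, 0.1, 0.2, 0.3, 0.4]   # same rhythm, faster tempo
uneven_coda = [0.0, 0.4, 0.8, 0.9, 1.0]

for coda in (even_coda, fast_even_coda, uneven_coda):
    print(coda, "->", nearest_template(coda, templates))
```

Normalizing the intervals is the key design choice here: two codas with the same rhythm at different speeds land on the same template. A real system would learn its categories from data rather than rely on hand-written templates.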

IV. From Sound to Thought: The Neural Layer

It’s not just sound. Brain-computer interface (BCI) research, such as UCSF's speech neuroprostheses and implants like Neuralink's, suggests that neural activity can be decoded into words or commands without the need for vocalization.

If this technology advances into animal research, it may become possible to correlate brain states with intent or emotion. For example, if an elephant is agitated, we might not only hear it but also map the accompanying neural pattern to a model that labels it as “urgency,” “distress,” or even “curiosity.”

V. The Ethical Cliff Edge

With power comes responsibility. If AI does help us decode animal language:

What if we misunderstand them?

Should intelligent animals be granted rights?

Could we exploit their understanding of us?

History has shown that when humans gain tools of power, misuse often follows. A new layer of ethics must emerge alongside these breakthroughs.

VI. Search Engines, Semantic Layers, and Animal Speech

Just as Google or ChatGPT learns to infer user intent beyond the exact words typed, similar architectures may be applied to animal signals. In human language, these models pick up on latent meaning such as sarcasm, sadness, or stress. That gives us a rough roadmap for decoding non-human signals.

Imagine AI that understands “emotional grammar,” not just symbolic language. That’s where we’re headed.

VII. Final Thoughts

AI is on the verge of granting us a privilege we’ve never had: the ability to listen—really listen—to the natural world. Not just observe behavior, but understand thought. If this succeeds, the line between “us” and “them” dissolves. We become one of many speaking species.

Further Reading & References

Earth Species Project

Project CETI

OpenAI Whisper Model (2022)

National Geographic: “The Secret Language of Whales” (2023)

Slobodchikoff, C.N. Chasing Doctor Dolittle

Google DeepMind’s AudioLM project
